Hi!

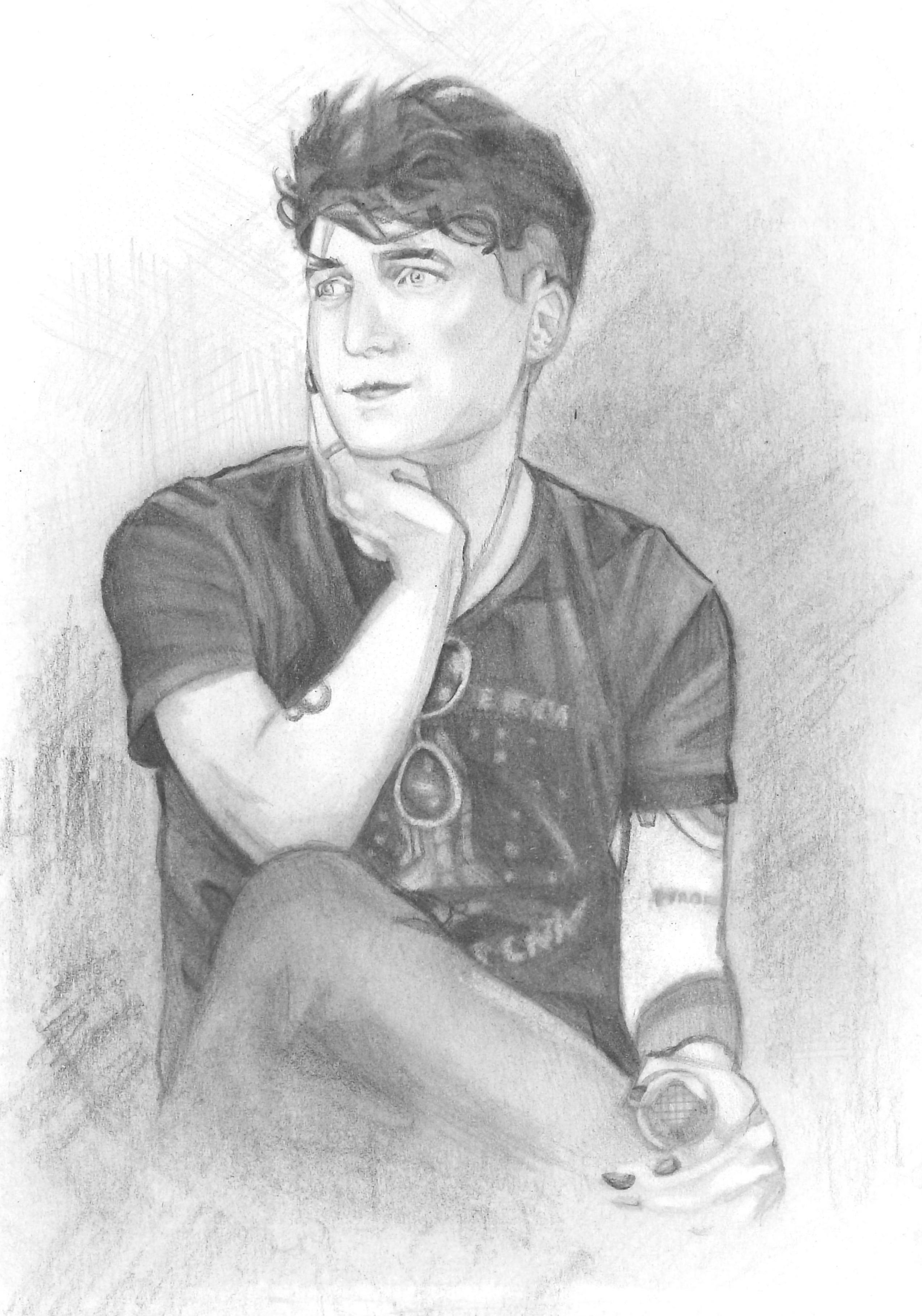

I’m Os Keyes, a PhD Candidate at the University of Washington’s Department of Human Centred Design & Engineering, where I mostly work on myself.

When I am not working on myself, I study (very generally) gender, disability, technology and/or power. I want to understand the role technology plays in constructing the world, and how we might construct a better one. Specific research questions I’m interested in or working on include:

- How do facial recognition systems encode and inscribe notions of race and gender? For that matter: what notions?

- What role do science and biomedicine play in measuring or authenticating trans existences?

- What implications does artificial intelligence have for disability in general, and autism in particular?

- How does classification shape people? How do those people resist that shaping?

My main focus right now (and my thesis project) is the second question, which has shifted into exploring the past, present and future of gender-affirming medicine and the politics of scientific efforts to demonstrate its effect on patients. You can read more about it on the page about my dissertation research.

Answering these questions involves too many books and influences (and methods, and disciplines). Right now I’m enjoying Rosalyn Diprose, Ian Hacking, Amia Srinivasan, Ken Plummer, Hil Malatino, Kristie Dotson, Renée Fox, Rahel Jaeggi, and Havi Carel. Aside from all of that, I am supported by, and an inaugural winner of, the Ada Lovelace Fellowship, write occasionally for Wired, Vice and a few other popular venues, and teach in Seattle University’s honours program for fun. In my spare time, I volunteer as the Biomedical Archivist at the University of Washington, processing interesting archive collections and making them available to other and future scholars.

I can be contacted for random questions, speaking engagements, threats, complaints and offers of high-value cheques at okeyes @ uw . edu. Please note that my title of address is Mx, not Miss, Mrs or Mr (or Dr, yet!) - unless, of course, you’re one of my students, in which case Professor will do :).