*Note: this was originally a piece commissioned by* Real Life *magazine, and can be found on their website.*

Every few months, a story comes across my Twitter or Instagram or Facebook (yes, I’m old) featuring a range of Ziggy Stardust-esque faces held up as the way to defeat facial recognition. And every few months, when I see this, I roll my eyes so hard they almost fall out of my head. That’s because this approach — sometimes called “CV dazzle” (CV as in “computer vision”) or “dazzle makeup” — is about as useless as it is popular among researchers, journalists, and influencers.

It might seem, as these stories suggest, that this story recurs because of some ongoing arms race between algorithm developers and makeup artists who adapt to each other’s strategies. But CV dazzle has never worked as an intervention; it has always been too detached from the realities of society to be of much use for those most vulnerable to surveillance. And its popularity, along with the popularity of ideas like it that imagine defeating the machines solely with personal interventions, serves mostly as a distraction from the kind of organized action that could better resist facial recognition.

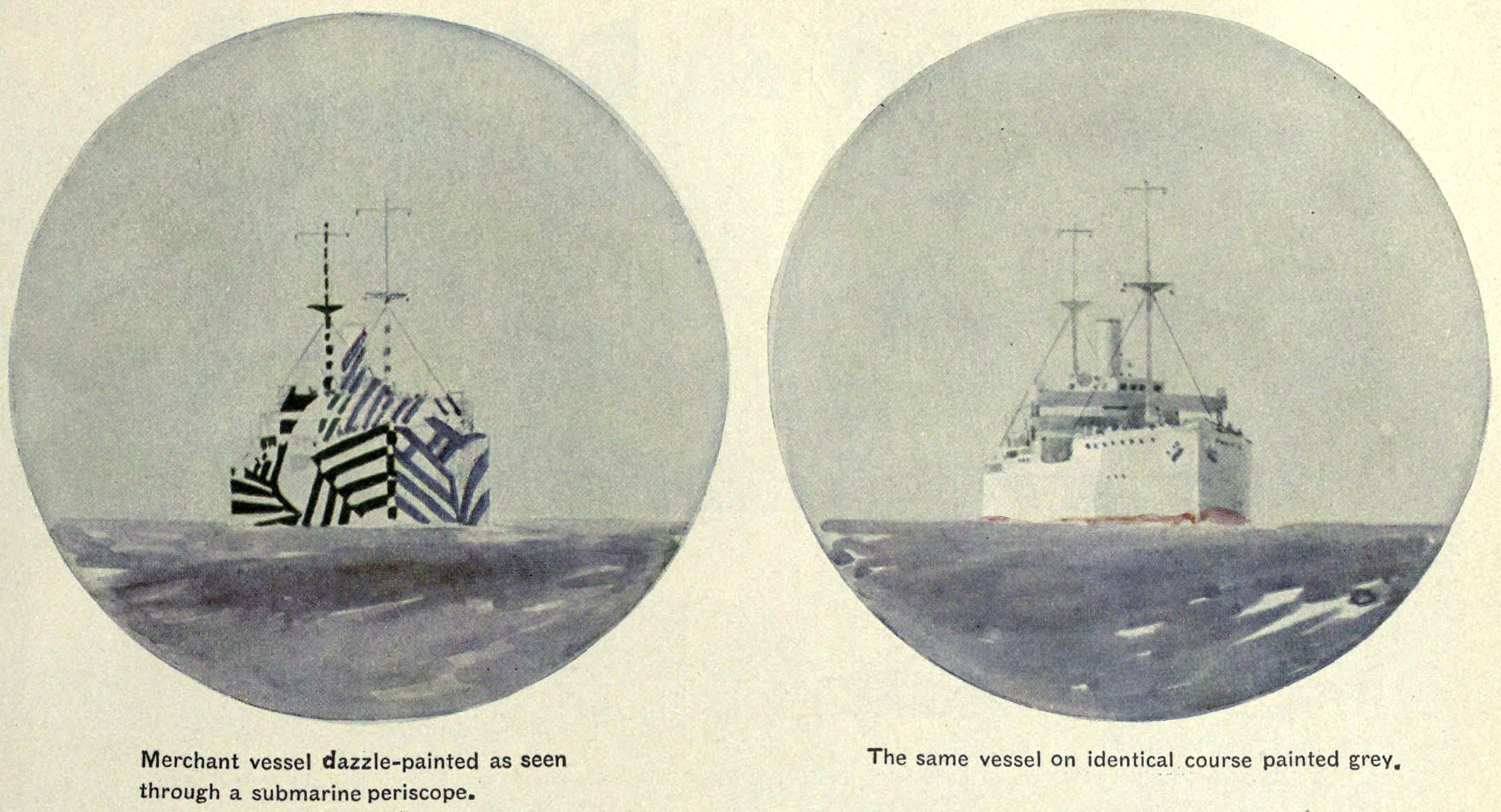

The term dazzle originally had nothing to do with faces; it concerned warships. During World Wars I and II, naval doctrine assumed that warfare would be based on and around battleships: the vast, floating hunks of steel with guns so big a person could get lost inside one. Given the slow rate of reloading and the vast damage a direct hit would do, the most important thing in battleship fights became which side could land a shot first. Since the guns had such long ranges, it took measurable time for shots to reach their target, so it was vital, for offensive purposes, to accurately measure the opponent’s distance, speed, and direction before firing. From a defensive perspective, it was just as vital to complicate and confound the opponent’s efforts to ascertain those pieces of data.

Enter dazzle. First proposed by zoologist John Graham Kerr, the idea was to repaint ships in such a way as to confuse the human eye. A collection of sharply contrasting angled lines painted on ships would likely make them more visible, but at the same time it would make it much more difficult for those human eyes to sense which direction the ships were going in. With dazzle camouflage, the enemy could see you, but they couldn’t hit you.

Whether this technique ever actually worked is hotly debated, but with the shift toward aircraft (and later missiles) in naval warfare and the rise in electronic sensors and range finding, it didn’t matter: Dazzle fell into disuse after the 1940s. That is, until the concept reappeared in the 21st century as a way to baffle a new enemy: facial recognition technology.

Dazzle goes computational

It’s no surprise that ongoing efforts are being made to defeat or confound facial recognition technology. It is a scourge I’d quite like to stake through the heart, decapitate, and scatter at a crossroads. Many other people feel similarly, even if they’d phrase it more diplomatically. As Phil Agre put it way back in 2001, the problem of facial recognition technology is that it “will work well enough to be dangerous, and poorly enough to be dangerous as well.” That is, in working, it subjects the public to unjustified surveillance, sorting, and control, and in failing more often with respect to certain groups, it subjects already marginalized members of society to additional scrutiny and violence.

Of course, faces aren’t ships, and algorithms aren’t seamen, but just like mid-20th-century rangefinders, facial recognition technology works through contrast: identifying the edges that tell you the shape of a face, the line of the nose, the angle of the jaw. Hence the idea of dazzle makeup: If you fuzz the contrasting edges of a face with, say, clashing bands of color, the facial recognition algorithms will be thwarted.

CV dazzle has been variously credited to Adam Harvey, Zach Blas and a range of other artists, each of whom has their own stated reasons for developing it. For Harvey, CV dazzle serves a direct, material purpose, aiming to “mitigate the risks of remote and computational visual information capture and analysis under the guise of fashion.” Blas’s framing is more theoretical; his masks are designed for use during “public interventions and performances” that aim to increase the public’s scrutiny of facial recognition. And in some respects they seem to have been successful: The idea of “surveillance makeup” or “dressing for the surveillance age” has received coverage in Vogue, the New Yorker, and a range of other publications and reappeared myriad times in social media posts. In many of these cases, the ongoing deployment of facial recognition technology is simply accepted as a given.

These posts capitalize on what should seem paradoxical: CV dazzle is a highly photogenic and attention-grabbing strategy for people who are ostensibly trying to become less noticeable. That is, it renders users invisible to algorithms by making them more visible to humans, which makes it effective as a fashion statement but counterproductive with regard to the hybrid human-computer surveillance infrastructure we’re actually confronting.

It’s worth remembering that dazzle on ships didn’t make them invisible; it made them more visible in a way that was harder to interpret. In other words, it ultimately drew attention to itself. The same goes for CV dazzle: It enhances one’s visibility so as to make one’s face hard for machines to interpret. But here the analogy with dazzle camouflage breaks down: On ships, dazzle was meant ultimately to fool the human operators who aimed the guns. But does CV dazzle do much to fool the people who are ultimately deploying the facial-recognition technology?

Picture this scene: You’re walking down the street and you see someone in hallucinogenic makeup with a reverse mullet and bright blue ears. You might reasonably conclude, Oh great, someone snuck LSD into the Gathering of the Juggalos, or Oh dear, someone snuck LSD into my morning coffee, or perhaps When did the QAnon Shaman get out of jail? But whatever your conclusion, you’re drawing a conclusion. That’s because that person is visible to you — hypervisible, in fact. The very markings that are intended to confound algorithms simultaneously highlight those faces for human observers as (hyper)visible, as other, as there. And that’s a problem, because facial recognition systems aren’t just a matter of algorithms in decontextualized, hypothetical situations.

In popular culture, when people think of facial recognition, they tend to think SHODAN — i.e., some vast, intangible, utterly artificial behemoth of surveillance and control that haunts our steps. In other words, they tend to imagine a monolithic and purely computational villain. But as I’ve previously noted, facial recognition is a whole infrastructure and assemblage of technologies, processes, and people. There’s the algorithm, sure — but there are also the cameras and their (increasingly immortal) storage systems, the observers who identify how to respond to anomalies or positive matches, and the law enforcement officers who carry out that response. There are the technologies themselves, and then there are the “street-level bureaucrats”: the people who decide what to do with and around the technologies.

In the abstract, CV dazzle could be very good at defeating the technologies. But in practice, it throws up a whole host of new problems caused by precisely the visibility that makes it functional. Can you go into work looking like that? Will it draw the eye of police officers on the ground? Will it draw the eye of CCTV operators, the people who monitor cameras and decide which observed situations warrant calling in the police? Will someone react to the failure-to-match error that CV dazzle is designed to trigger? And how easy is it to toggle on and off to account for those situations in which a match is desired, in which one needs to be recognizable simply in order to go to work?

The common answers to those questions are largely unsettling. Moreover, the answers that pertain to you likely depend on who you are. Facial recognition technology reinforces existing, ongoing relations of violence and control in society that are disproportionately applied to black and brown bodies, trans bodies, disabled bodies. This is part and parcel not only of the technology itself - through both its well-documented biases and its dependence on the idea of “threats” as visually other, exceptional, or monstrous - but also of the overarching systems that deploy the technology. Police patrols didn’t need facial recognition technology to engage in discriminatory profiling, stop-and-search tactics and murder; racist CCTV operators didn’t need it to trigger those patrols’ (discriminatory) deployment. Street harassment and workplace discrimination were already endemic before facial recognition, and the infrastructures that sustained all that discrimination and violence are not being replaced by facial recognition tech but augmented by it. Those humans are still present. And in rendering one invisible to an algorithm, dazzle makeup renders one hyper-visible to a cop. The result is that at best, dazzle makeup might be viable for a very narrow tranche of white people who are given the benefit of the doubt and assumed to be on their way to a Marilyn Manson concert. But for everyone else, it doesn’t reduce the exposure to harm; it heightens it.

Diagnosing dazzle

None of this is news to Harvey, Blas or any of the other originators of CV dazzle; their projects are art, and exist (as much of art, and much of the queer theory that inflects their work, does) as a provocation. And they’re interesting provocations, at that - they highlight precisely the ambiguous nature of visibility that I’m talking about. But much of the uptake of the idea (its coverage or, less generously, its [recuperation](https://en.wikipedia.org/wiki/Recuperation_(politics))) treats CV dazzle instead as a practical, purposeful project, raising all of the questions mentioned above.

This, then, is what really interests me: not only CV dazzle’s obvious uselessness on a day-to-day basis, but why (given this obviousness) it has been so appealing to so many people. Mainstream media outlets hardly go out of their way to profile political art projects or interventionist approaches to resist the state, after all, and it’s doubly rare to see anti-surveillance activists and CNN on the same page.

A big part of it, it seems to me, is that it is … challenging without being challenging. That is, it promises a form of resistance and action that does not actively destabilize our notions of what resistance and action look like: something individualized, something physical, something direct. We live in a world that is simultaneously (and not unrelatedly) highly individualized and increasingly unstable. Many people feel increasing uncertainty and helplessness, that the things they took for granted are false, that their futures are closing off before their very eyes, and that they lack any control over (and are alienated from) their lives.

In such an environment, CV dazzle is appealing because it promises the fantasy (likely the vicarious fantasy — I’ve yet to see many people actually use it) of control, and of action. It’s something you can do unilaterally. But it’s further appealing because it is familiar; it doesn’t require people to change anything fundamental about their orientation to the world. Its idea of what resistance looks like — individualized, atomized — coheres with what neoliberal culture so loves. Invulnerability and security are understood as states that should be aspired to and can be achieved through personal responsibility alone. Ironically, it is the same mind-set that underpins the advocacy of using Ring cameras for security: All you need is this one neat trick (or app), and you can be protected from the chaos brought on, in part, by the neat-trick-oriented approach to social change.

In other words: CV dazzle offers us a way to address the consequences of what many of us believe without requiring us to address the complicity of those beliefs: namely (and paradoxically) the simultaneous belief that existence is an individualized state of being and that our own experiences can be generalized and mapped on to those of others. Its promises don’t challenge the individualization of society; they don’t challenge the contrasting bloated hegemony of law enforcement; they do not denaturalize any of these seemingly natural states of affairs. With dazzle makeup, we can be unfamiliar to algorithms while remaining familiar to ourselves. More substantive change — and more effective change — would require that denaturalization. It demands an increase in uncertainty that is more than many feel they can bear.

That denaturalization is scary does not mean it is not necessary. The obsession with security and surveillance-based forms of social control contributes to the atomization of society and the paranoid, suspicious helplessness associated with it, which in turn reproduces and intensifies the surveillance obsession. It all stems from an inability to push back meaningfully and collectively against those forms of control. Individual solutions are premised in part on mistrust, on the refusal to rely on anybody other than oneself, on security coming through the control of vulnerability. Those are exactly the same premises, as Erinn Gilson so meaningfully argues, that underpin the very logics anti-surveillance activists (and/or racial justice activists, feminist activists, and many other movements) are fighting against.

What we need is not individualized forms of resistance that work only for those people with the fewest reasons to worry in the first place. What we need is a plurality and diversity of tactics, actions and organizations, at street level, in legislative hearings, in protests, that exist within an ecology of resistance. That is, interventions should be clearly recognized as mutually reinforcing, mutually shaping, and made up of a million people working not on their own but in collectives, communities, together. If this involves street protests under the eyes of surveillance systems, well, we already have a tool for evading those that doesn’t require you to break the bank at Sephora. It’s called a mask, and frankly, you should probably be wearing one anyway.